HFS+ Data Recovery Algorithm: Comprehensive Guide

Unlock the secrets of the HFS+ data recovery algorithm! Curious about how data recovery works on HFS+ (Hierarchical File System Plus) volumes? In this article, we’ll look closely at the HFS+ data recovery algorithm. Whether you’re a tech enthusiast, an IT professional, or simply interested in storage technologies, this guide explains how HFS+ data recovery works, from the structure of the HFS+ file system to the methods used to recover lost data.

- 1. Advantages and peculiarities of HFS+

- 2. HFS Plus architecture

- 3. Data recovery in Time Machine

- 4. Recovery algorithm for HFS+

- Conclusion

- Questions and answers


1. Advantages and peculiarities of HFS+

The main difference between HFS+ and HFS is that HFS+ addresses allocation blocks with 32-bit numbers instead of 16-bit ones. The older addressing scheme was a serious limitation in itself, because an HFS volume could not contain more than 65 536 allocation blocks. As a result, the block size had to grow with the volume: on a 1 GB disk, the allocation block (cluster) under HFS is 16 KB, so even a 1-byte file takes up a full 16 KB of disk space.
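The block-size arithmetic above can be checked with a short Python sketch. It assumes 512-byte sectors and the block-count limits quoted in this section, and it computes only the smallest possible cluster size; the function names are illustrative, and real HFS+ volumes are usually formatted with larger blocks (typically 4 KB).

```python
SECTOR_SIZE = 512              # assumed sector size in bytes
HFS_MAX_BLOCKS = 2 ** 16       # 65 536 blocks with 16-bit addresses
HFSPLUS_MAX_BLOCKS = 2 ** 32   # 4 294 967 296 blocks with 32-bit addresses


def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up (for non-negative a and positive b)."""
    return -(-a // b)


def min_block_size(volume_bytes: int, max_blocks: int) -> int:
    """Smallest whole number of sectors per block that still covers the volume."""
    bytes_per_block = ceil_div(volume_bytes, max_blocks)
    return max(1, ceil_div(bytes_per_block, SECTOR_SIZE)) * SECTOR_SIZE


def slack(file_bytes: int, block_size: int) -> int:
    """Bytes wasted in the last, partially filled block of a file."""
    return ceil_div(file_bytes, block_size) * block_size - file_bytes


one_gb = 2 ** 30
hfs_block = min_block_size(one_gb, HFS_MAX_BLOCKS)
hfsplus_block = min_block_size(one_gb, HFSPLUS_MAX_BLOCKS)
print(hfs_block, slack(1, hfs_block))          # 16384 16383
print(hfsplus_block, slack(1, hfsplus_block))  # 512 511
```

With 16-bit block numbers, a 1-byte file on a 1 GB HFS volume wastes 16 383 bytes; with 32-bit numbers the block count is no longer what forces large clusters.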

Like its predecessor, HFS+ stores most of its metadata in B-trees.

HFS+ volumes are divided into sectors (called logical blocks in HFS), usually 512 bytes in size. One or more sectors make up an allocation block (cluster), and the number of allocation blocks depends on the total size of the volume. With 32-bit addressing, a volume can have up to 4 294 967 296 clusters, compared to 65 536 with 16-bit addressing. The two file systems also differ in the following ways:

- file name length: in HFS: 31, in HFS+: 255;

- file name encoding: HFS: Mac Roman, HFS+: Unicode;

- catalog B-tree node size: HFS: 512 bytes, HFS+: 4 KB;

- maximum file size: HFS: 2^31 bytes, HFS+: 2^63 bytes.

| Advantage or Difference | Description |
|---|---|
| Support for large files | HFS+ supports large files, making it suitable for storing multimedia and other large data files. |
| Journaling file system | HFS+ journals metadata changes, which reduces the risk of file system corruption during system crashes or sudden power outages. |
| Unicode support | HFS+ stores file names in Unicode, so names in different languages can be used. |
| Optimized for use in macOS | HFS+ was designed specifically for macOS, providing high performance and stability on Apple devices. |
| Defragmentation | macOS automatically defragments small, heavily fragmented files on HFS+ volumes when they are opened, which improves read and write performance. |
| Metadata support | HFS+ supports extended metadata, helping to better organize and manage files. |
| Compatibility with Time Machine | HFS+ is fully compatible with the Time Machine backup feature in macOS. |
| Limitations on modern system support | HFS+ lacks the features and reliability of APFS (Apple File System), the default file system in newer versions of macOS. |


2. HFS Plus architecture

As described in the previous section, HFS Plus volumes consist of sectors, usually 512 bytes in size, which are grouped into allocation blocks (similar to clusters in Windows) of one or more sectors each. HFS Plus addresses these blocks with 32-bit numbers rather than the 16-bit numbers used in HFS. All multi-byte values in HFS Plus structures are stored in big-endian byte order.

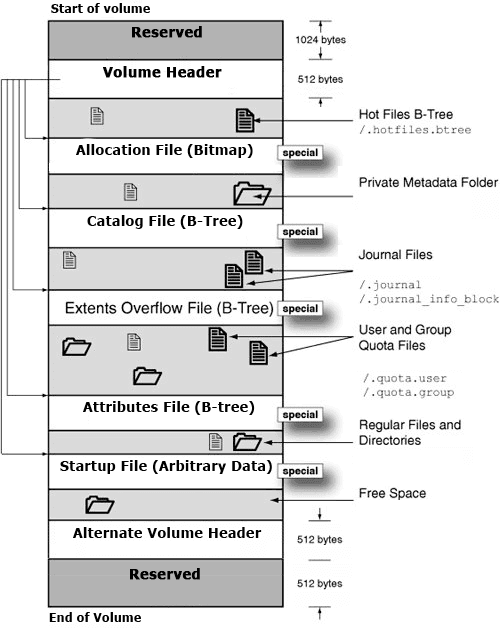

To manage the process of data allocation on the disk, HFS+ stores special service information known as metadata. The following elements are most important for the proper operation of the file system and are of special interest when searching for missing data:

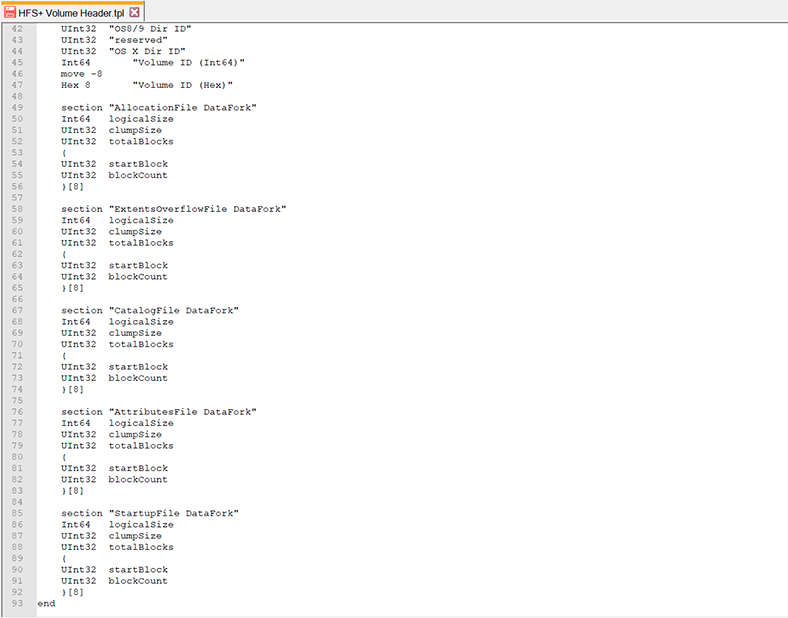

- Volume Header. Has a table-type structure, and makes use of extent records (Extents);

- Allocation File. Structured as a bitmap, it makes use of extent records (Extents);

- Catalog File. Has B-tree structure, and makes use of extent records (Extents);

- Extents Overflow File. Has a B-tree structure;

- Bad block file. Its extent records are kept in the Extents Overflow File B-tree;

- StartUp file. Fixed size;

- Journal. A disk area with a fixed size and location.

HFS+ has other structures as well (for example, the Attributes File, which stores extended attributes), but the ones listed above are the most important for data recovery. Before examining them in detail, we need to explain two basic notions: B-trees and extents.

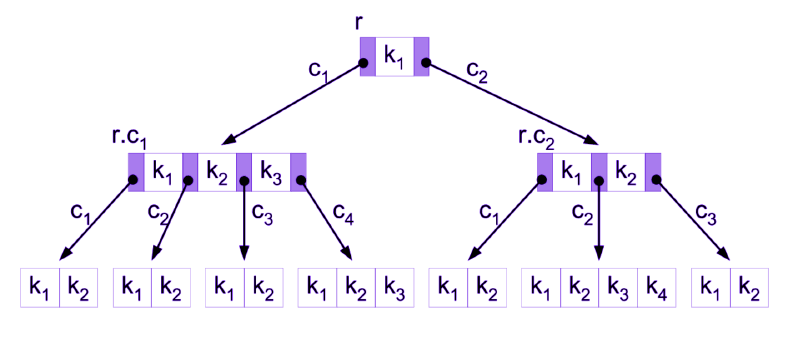

What is a B-tree?

HFS+ uses B-trees to store much of its metadata. A B-tree lets the file system spread a large amount of data (for example, 100 MB) across fixed-size blocks called nodes (for example, 4 KB each) and still find any record quickly. The first node of the tree, the header node, describes the tree itself and points to its root. Index nodes contain keys with links to nodes on the next level down, while leaf nodes, the lowest level of the tree, contain the actual data records.
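As an illustration, the Python sketch below reads the 14-byte node descriptor that, according to Apple's Technical Note TN1150, begins every HFS+ B-tree node, together with the table of record offsets stored at the end of the node. The function name is hypothetical, and the sketch assumes the node has already been read into memory.

```python
import struct

# Node kinds as listed in Apple's Technical Note TN1150.
NODE_KINDS = {-1: "leaf", 0: "index", 1: "header", 2: "map"}


def parse_node(node: bytes):
    """Return (kind, height, record_offsets) for one B-tree node held in memory."""
    # BTNodeDescriptor, 14 bytes, big-endian: fLink and bLink (UInt32, links to
    # neighbouring nodes), kind (SInt8), height (UInt8), numRecords and reserved (UInt16).
    _f_link, _b_link, kind, height, num_records, _reserved = struct.unpack_from(">IIbBHH", node, 0)
    # Record offsets are UInt16 values at the very end of the node, stored
    # backwards: the last two bytes hold the offset of record 0.
    offsets = [
        struct.unpack_from(">H", node, len(node) - 2 * (i + 1))[0]
        for i in range(num_records)
    ]
    return NODE_KINDS.get(kind, "unknown"), height, offsets


# A synthetic 512-byte leaf node (height 1) with two records.
node = bytearray(512)
struct.pack_into(">IIbBHH", node, 0, 0, 0, -1, 1, 2, 0)
struct.pack_into(">HH", node, 508, 30, 14)  # offsets of record 1 and record 0
print(parse_node(bytes(node)))  # ('leaf', 1, [14, 30])
```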

What are extent records and the Extents Overflow File?

To record which blocks a file occupies, HFS+ uses extents. Each extent stores the number of the first allocation block of a fragment and the number of contiguous allocation blocks (clusters) in it. Up to 8 extents (from 0 to 8 in use) are stored together with the file's catalog record. If a file is so fragmented that 8 extents are not enough to describe all of its parts, the remaining extents are stored in a separate B-tree, the Extents Overflow File.
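Here is a minimal Python sketch of how extents are used. It decodes one 64-byte extent record (8 pairs of big-endian UInt32 startBlock/blockCount values, following TN1150) and translates a byte offset inside a file into a byte offset on the volume. It assumes allocation block 0 starts at the beginning of the volume, as it does in HFS+, and it ignores the Extents Overflow File; the function names are hypothetical.

```python
import struct


def parse_extent_record(raw: bytes):
    """Decode an 8-entry HFSPlusExtentRecord (64 bytes, big-endian)."""
    values = struct.unpack_from(">16I", raw, 0)
    pairs = zip(values[0::2], values[1::2])  # (startBlock, blockCount)
    return [(start, count) for start, count in pairs if count > 0]  # skip unused slots


def file_offset_to_disk(offset: int, extents, block_size: int):
    """Map a byte offset inside a file to a byte offset on the volume."""
    block_index, within_block = divmod(offset, block_size)
    for start, count in extents:
        if block_index < count:
            return (start + block_index) * block_size + within_block
        block_index -= count
    return None  # beyond these extents, e.g. described in the Extents Overflow File


record = struct.pack(">16I", 100, 2, 500, 3, *([0] * 12))  # a file in two fragments
extents = parse_extent_record(record)
print(extents)                                             # [(100, 2), (500, 3)]
print(file_offset_to_disk(2 * 4096 + 10, extents, 4096))  # 2048010
```

The third block of the file (index 2) is the first block of the second fragment, so its data sits at block 500 on the volume.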

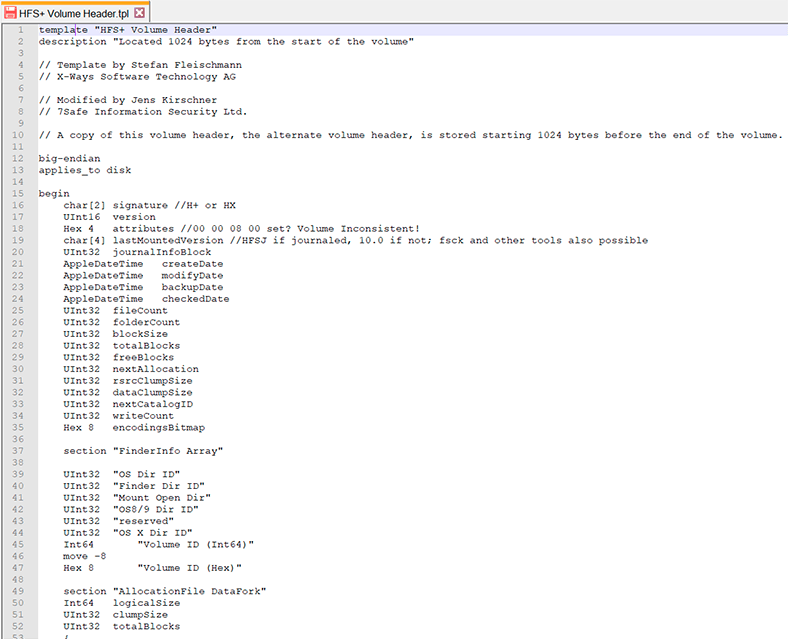

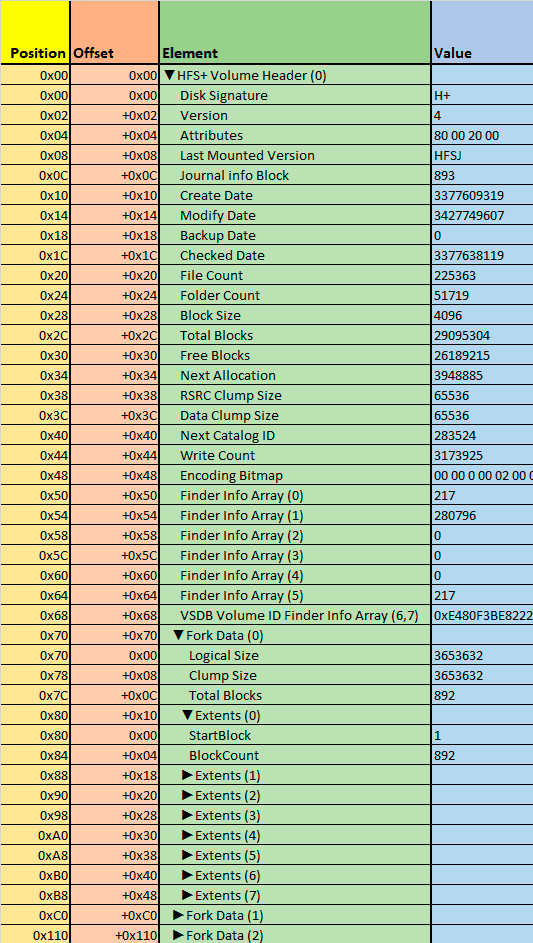

Volume header

The Volume Header is always located 1024 bytes from the start of the volume, that is, in the third 512-byte sector, right after the two sectors reserved for boot blocks. It contains general information about the volume, such as the size of allocation blocks, a timestamp indicating when the volume was created, and the location of other volume structures: the Catalog File, Extents Overflow File, Allocation File, Journal, etc. The second-to-last sector of the volume always contains the Volume Header Copy, that is, a copy of the Volume Header.
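A minimal Python sketch of reading the Volume Header is shown below. The field offsets follow the 512-byte HFSPlusVolumeHeader layout in TN1150 (signature "H+" or "HX", blockSize at offset 40, and 80-byte fork descriptions of the special files starting at offset 112); the function names are hypothetical, and the whole volume is assumed to be available as a byte string.

```python
import struct

HEADER_OFFSET = 1024  # the Volume Header starts 1024 bytes into the volume
HEADER_SIZE = 512     # HFSPlusVolumeHeader is 512 bytes long


def parse_fork(raw: bytes, offset: int):
    """Decode an 80-byte HFSPlusForkData: logicalSize, clumpSize, totalBlocks, 8 extents."""
    logical_size, _clump_size, _total_blocks = struct.unpack_from(">QII", raw, offset)
    values = struct.unpack_from(">16I", raw, offset + 16)
    extents = [(s, c) for s, c in zip(values[0::2], values[1::2]) if c > 0]
    return logical_size, extents


def parse_volume_header(raw: bytes):
    """Return the main fields of an HFS+ Volume Header, or None if it looks invalid."""
    if len(raw) < HEADER_SIZE:
        return None
    signature, version = struct.unpack_from(">2sH", raw, 0)
    if signature not in (b"H+", b"HX"):  # HFS+ or its case-sensitive variant HFSX
        return None
    block_size, total_blocks, free_blocks = struct.unpack_from(">III", raw, 40)
    return {
        "signature": signature.decode("ascii"),
        "version": version,
        "block_size": block_size,
        "total_blocks": total_blocks,
        "free_blocks": free_blocks,
        "allocation_file": parse_fork(raw, 112),
        "extents_file": parse_fork(raw, 192),
        "catalog_file": parse_fork(raw, 272),
    }


def read_volume_header(volume: bytes):
    """Try the primary header first, then the copy 1024 bytes before the end."""
    for offset in (HEADER_OFFSET, len(volume) - HEADER_OFFSET):
        header = parse_volume_header(volume[offset:offset + HEADER_SIZE])
        if header is not None:
            return offset, header
    return None
```

Falling back to the copy near the end of the volume matters for recovery: if the first sectors are damaged, the copy may still be intact.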

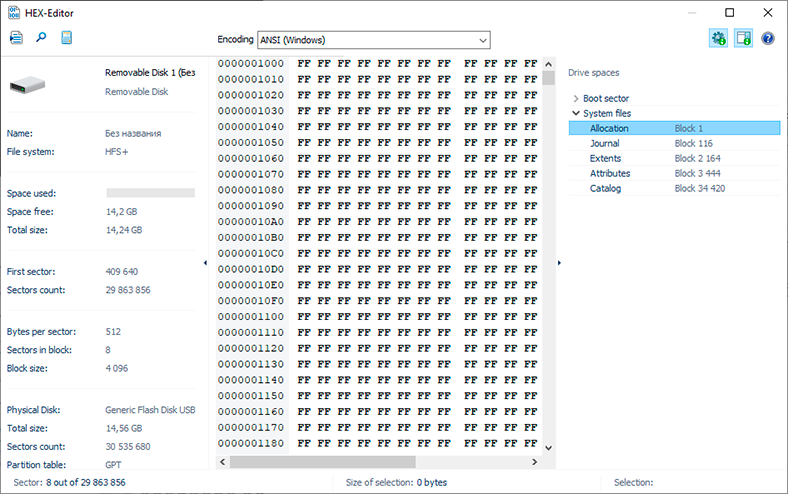

Allocation file

It records which allocation blocks are occupied and which are free. Each allocation block is represented by one bit: 0 means the block is free, and 1 means the block is in use. For this reason, the structure is sometimes called a bitmap. The Allocation File can change size and does not have to be stored contiguously on the volume; the location of its extents is stored in the Volume Header.
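The bitmap can be queried with a few lines of Python. This sketch follows the bit order given in TN1150 (the most significant bit of the first byte describes block 0) and lists runs of free blocks. Because the blocks of a deleted file are marked free, such runs are one plausible place for a recovery tool to look for leftover data; the function names are hypothetical.

```python
def block_in_use(bitmap: bytes, block: int) -> bool:
    """True if the Allocation File marks this allocation block as used."""
    byte_index, bit_index = divmod(block, 8)
    # The most significant bit of byte 0 describes block 0 (bit order from TN1150).
    return bool(bitmap[byte_index] & (0x80 >> bit_index))


def free_runs(bitmap: bytes, total_blocks: int):
    """Yield (first_block, length) for each run of free blocks."""
    run_start = None
    for block in range(total_blocks):
        if not block_in_use(bitmap, block):
            if run_start is None:
                run_start = block
        elif run_start is not None:
            yield run_start, block - run_start
            run_start = None
    if run_start is not None:
        yield run_start, total_blocks - run_start


bitmap = bytes([0b11000011, 0b10000000])
print(list(free_runs(bitmap, 16)))  # [(2, 4), (9, 7)]
```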

Catalog file

It stores most of the information about files and folders on the volume, including where their data is located, and is organized as one large B-tree.

In HFS Plus, this file is very similar to the catalog file in HFS; the main difference is that its fields are larger and hold more data. For example, it supports file names of up to 255 Unicode characters. The catalog B-tree node size is 512 bytes in HFS, compared to 4 KB in HFS Plus under Mac OS and 8 KB under OS X. Fields in HFS have a fixed size, while HFS Plus lets them vary in size depending on the actual amount of data.

Most fields store small attributes that fit into 4 KB. Larger attributes use extents (up to 8 of them; if more are needed, they are saved to the Extents Overflow File), which point to the allocation blocks where the attribute's data is stored.
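The variable-size records of the catalog can be illustrated with a short Python sketch that decodes one catalog leaf record: the key (parent folder ID and a UTF-16 name of up to 255 characters) and the record type that follows it. The layout is taken from TN1150, where folder ID 2 is the root folder; the function name and the sample file name are hypothetical, and the sketch does not decode the rest of the record.

```python
import struct

# Catalog record types listed in TN1150.
RECORD_TYPES = {1: "folder", 2: "file", 3: "folder thread", 4: "file thread"}


def parse_catalog_record(node: bytes, offset: int):
    """Return (parent_id, name, record_type) for one catalog leaf record."""
    # HFSPlusCatalogKey: keyLength (UInt16, not counting itself), parentID (UInt32),
    # nodeName as HFSUniStr255 = length (UInt16) + UTF-16 big-endian characters.
    key_length, parent_id, name_length = struct.unpack_from(">HIH", node, offset)
    name_start = offset + 8
    name = node[name_start:name_start + 2 * name_length].decode("utf-16-be")
    # The record data follows the key and starts with an SInt16 record type.
    (record_type,) = struct.unpack_from(">h", node, offset + 2 + key_length)
    return parent_id, name, RECORD_TYPES.get(record_type, "unknown")


name = "Report.txt"
key = struct.pack(">HIH", 6 + 2 * len(name), 2, len(name)) + name.encode("utf-16-be")
record = key + struct.pack(">h", 2)  # a file record (its remaining fields are omitted)
print(parse_catalog_record(record, 0))  # (2, 'Report.txt', 'file')
```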

StartUp file

This file is intended for operating systems that don’t support HFS or HFS Plus, and is similar to the boot blocks of HFS volumes.

Bad blocks

This file lists all allocation blocks marked as bad (faulty), so that the file system never uses them to store data.

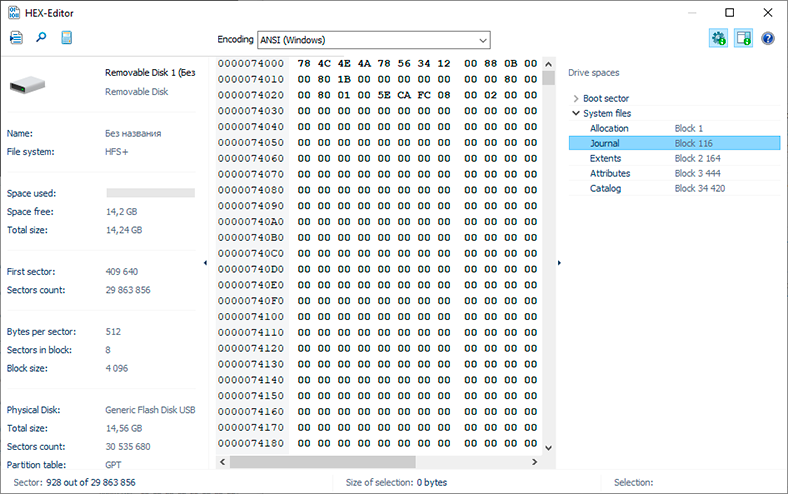

Journal

The journal is not an ordinary file but a contiguous, fixed-size area (set of blocks) on the disk. The Volume Header points to a journal info block, which stores the location and size of this area. Before metadata changes are written to their final location, HFS+ first writes them to the journal, and only then to the system files. If power is cut during a write operation, the file system can replay the journal and return to a consistent state.
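More precisely, according to TN1150, a journaled volume has bit 13 set in the Volume Header attributes, and the header's journalInfoBlock field holds the number of the allocation block containing a journal info block; that block gives the journal's byte offset and size. The minimal Python sketch below locates the journal this way. It does not parse the journal contents, and the function name is hypothetical.

```python
import struct

JOURNALED_BIT = 13   # kHFSVolumeJournaledBit in the volume attributes
JOURNAL_IN_FS = 0x1  # kJIJournalInFSMask: the journal lives on this volume


def locate_journal(volume: bytes, header: bytes, block_size: int):
    """Return (byte_offset, byte_size) of the journal, or None if there is none here."""
    # attributes (UInt32) at offset 4, lastMountedVersion at 8, journalInfoBlock at 12.
    attributes, _last_mounted, info_block = struct.unpack_from(">III", header, 4)
    if not attributes & (1 << JOURNALED_BIT):
        return None
    info_offset = info_block * block_size  # journalInfoBlock is an allocation block number
    # JournalInfoBlock: flags (UInt32), device_signature (8 x UInt32),
    # offset (UInt64, counted from the start of the volume), size (UInt64).
    (flags,) = struct.unpack_from(">I", volume, info_offset)
    if not flags & JOURNAL_IN_FS:
        return None  # the journal is stored on another device
    offset, size = struct.unpack_from(">QQ", volume, info_offset + 36)
    return offset, size
```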

Note, however, that the HFS+ journal has a limited size and its contents are overwritten regularly. On a Mac mini, the boot volume journal is usually overwritten within 5-10 minutes, and on a MacBook within about 30 minutes. If Time Machine is enabled, this time drops to 20 seconds.

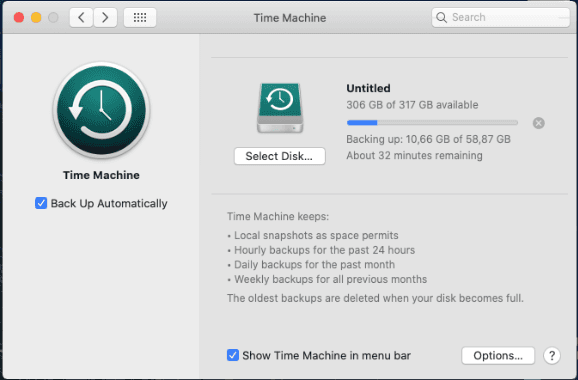

3. Data recovery in Time Machine

Beginning with Mac OS X Leopard, the operating system includes Time Machine. This utility makes regular backup copies of files and keeps their earlier versions, so users can restore the entire operating system, several files, or an individual file exactly as it was at a certain moment in time.

Time Machine needs a separate disk for its backups. Apple used to make a dedicated device, Apple Time Capsule, which served as a network drive for storing Time Machine backups, but Time Machine can also use any USB or eSATA disk. When you start it for the first time, Time Machine creates a folder on the selected backup disk to store all the data.

Later, Time Machine will only copy the modified files. Generally speaking, as long as Time Machine is used for a disk, recovering lost data is not much of a problem.

4. Recovery algorithm for HFS+

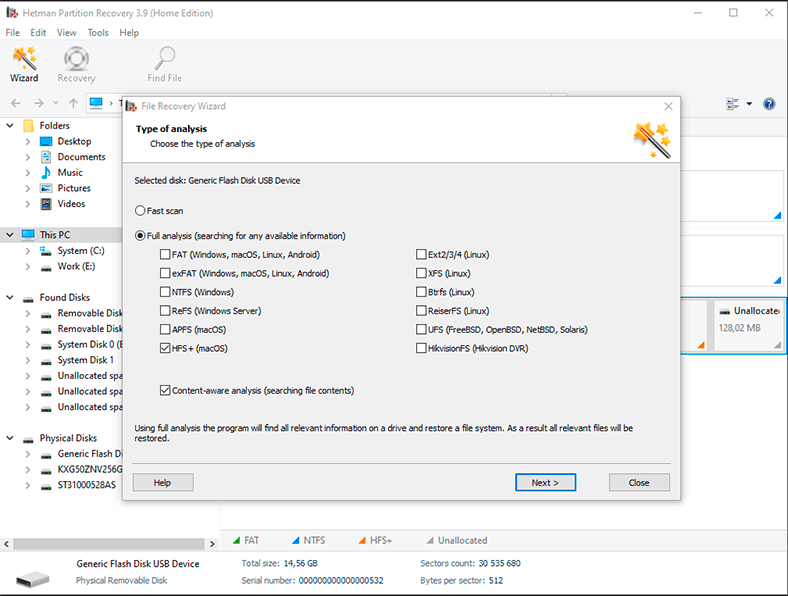

Recovering data from an HFS+ file system is more difficult than from many other file systems. One reason is that HFS+ stores most of the metadata on file allocation in B-trees. When a file is deleted, the B-tree is updated immediately, so the record describing where the deleted file was located is lost almost at once.

Our program, Hetman Partition Recovery, lets you open a storage device and browse its HFS+ structure in Windows without installing any additional software or drivers.

| Step | Action Description |
|---|---|
| 1. Install the program | Download and install Hetman Partition Recovery on your computer. |
| 2. Connect the drive | Connect the HFS+ drive to your computer if it is not an internal one. |
| 3. Launch the program | Open Hetman Partition Recovery and select the HFS+ drive from the list of available disks. |
| 4. Scan the disk | Start the scanning process to detect lost or deleted files. |
| 5. View recovered data | After scanning is complete, review the recovered files in a format convenient for you. |
| 6. Select files for recovery | Select the files you want to recover and click the recovery button. |
| 7. Save recovered files | Save the recovered files to another drive or external storage to prevent data overwriting. |

The program offers two scanning modes: a fast scan and a full analysis.

If you choose a fast scan, the program reads the Volume Header (or its copy) to locate the Catalog File and the journal on the disk. If the blocks belonging to these structures have not been overwritten yet, the program reads them and recovers the data.

If the blocks of a deleted file haven’t been overwritten, this method recovers the file completely. Even if overwriting has taken place, information about the file may still be found in the journal; otherwise, the file can be recovered only partially.
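The fast-scan step of getting into the Catalog File can be sketched in Python as follows. The sketch assumes the first allocation block of the Catalog File has already been taken from the Volume Header (for example, from the first extent of the catalog's fork). Per TN1150, node 0 of every HFS+ B-tree is a header node whose BTHeaderRec, right after the 14-byte node descriptor, gives the tree depth, the root node, the node size and other counters. This is an illustration, not Hetman Partition Recovery's actual code, and the function name is hypothetical.

```python
import struct

HEADER_NODE = 1  # node kind of a B-tree header node (TN1150)


def read_catalog_header(volume: bytes, block_size: int, catalog_start_block: int):
    """Read node 0 of the Catalog File and return key fields of its BTHeaderRec."""
    node_offset = catalog_start_block * block_size
    (kind,) = struct.unpack_from(">b", volume, node_offset + 8)  # kind follows fLink/bLink
    if kind != HEADER_NODE:
        return None  # overwritten or not a catalog, so this shortcut cannot be used
    # BTHeaderRec starts right after the 14-byte node descriptor.
    (depth, root_node, leaf_records, first_leaf, last_leaf,
     node_size, _max_key_length, total_nodes, free_nodes) = struct.unpack_from(
        ">HIIIIHHII", volume, node_offset + 14)
    return {
        "tree_depth": depth,
        "root_node": root_node,
        "leaf_records": leaf_records,
        "first_leaf_node": first_leaf,
        "last_leaf_node": last_leaf,
        "node_size": node_size,
        "total_nodes": total_nodes,
        "free_nodes": free_nodes,
    }
```

Node n of the catalog then starts n × node_size bytes into the Catalog File, which a tool would translate into a disk offset through the file's extents.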

The full analysis algorithm allows the program to exclude certain elements while searching for deleted data, so it can rebuild the disk structure and display deleted files even if the Volume Header and Catalog File have been partially overwritten.
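The exact full-analysis algorithm is not described here. One possible approach, shown below as a hypothetical heuristic rather than the program's method, is to scan a raw disk image for chunks that look like B-tree leaf nodes even when the Volume Header no longer says where the catalog is. The sketch assumes a 4 KB node size and nodes starting on 4 KB boundaries; both values, and the function names, are assumptions.

```python
import struct


def looks_like_leaf_node(node: bytes) -> bool:
    """Heuristic check that a chunk of bytes could be an HFS+ B-tree leaf node."""
    _f, _b, kind, height, num_records, reserved = struct.unpack_from(">IIbBHH", node, 0)
    if kind != -1 or height != 1 or num_records == 0 or reserved != 0:
        return False
    # The offset table at the end holds num_records + 1 UInt16 entries:
    # one per record plus the offset of the free space.
    count = num_records + 1
    table_start = len(node) - 2 * count
    if table_start < 14:
        return False
    offsets = [struct.unpack_from(">H", node, len(node) - 2 * (i + 1))[0] for i in range(count)]
    # Records start right after the 14-byte descriptor, grow in order,
    # and never overlap the offset table.
    return (offsets[0] == 14
            and all(a < b for a, b in zip(offsets, offsets[1:]))
            and offsets[-1] <= table_start)


def scan_for_leaf_nodes(image: bytes, node_size: int = 4096, step: int = 4096):
    """Yield offsets of node-sized chunks (starting every `step` bytes) that pass the check."""
    for offset in range(0, len(image) - node_size + 1, step):
        if looks_like_leaf_node(image[offset:offset + node_size]):
            yield offset
```

Candidate nodes found this way would still have to be validated record by record, since random data can occasionally pass such a simple check.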

Conclusion

All in all, HFS+ is an obsolete file system that was created as an improved version of an even older file system, HFS. It has now been replaced by the Apple File System (APFS).

In terms of performance, safety and reliability, HFS+ falls considerably behind APFS, which makes data recovery especially relevant. Information rarely disappears without a trace, so if you know well how the file system works, you may be able to recover even data initially considered lost for good.
